Bibliometrics: Making Sense of Citations

Module developed by Michael Dietrich from the Department of History and Philosophy of Science at the University of Pittsburgh

This module will introduce basic techniques in bibliometrics, such as citation analysis, citation impact factors, and bibliometric networks. You will consider how these methods are applied to the analysis of research quantity and quality. In addition to learning bibliometric methods, you will consider the limits of this form of analysis. Specifically, you will confront and question the “publish or perish” model of academic knowledge production, which places significant value on a single kind of scholarly output, and even more value on the perceived circulation of that output. Of course, you will not perish if you do not publish. There is more to life than academic publishing.

WATCH

DO

In this exercise, you will generate impact metrics for a series of scholars. This work will introduce you to ideas such as citation analysis, impact factors, and bibliometric networks. You will reflect on the affordances and limitations of these methods to determine the value of scholarly work. To complete this exercise you will need a computer with an internet connection, access to Google Scholar, and the free software Publish or Perish. The estimated time to complete this assignment is 20-30 minutes.
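One of the impact metrics that tools such as Publish or Perish report is the h-index: the largest number h such that a scholar has h papers with at least h citations each. The following is a minimal sketch of that calculation, not the software's own implementation. The function name `h_index` and the citation counts are hypothetical and chosen only for illustration.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            # The paper at this rank still has enough citations.
            h = rank
        else:
            break
    return h


# Hypothetical citation counts for one scholar's papers (not real data).
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))  # prints 3
```

Note that the single highly cited paper (25 citations) does not raise the result: the h-index rewards a sustained body of cited work rather than one standout publication, which is one of the limitations to reflect on in this exercise.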

Exercise Download

EXPLORE

Try Other Databases

Repeat your searches with Google Scholar Profile selected as your database. These profiles can be curated for accuracy by the subject. Compare the Google Scholar and Google Scholar Profile results. Are the results the same? If not, which database yields higher results? Now repeat your searches with different databases. (PubMed is open to all. Other databases may require registration.) Are the results the same? Do they vary significantly by field or by the scholar’s seniority? Why or why not? Finally, repeat your searches with scholars from the same field and the same seniority using a single database. Do the results vary widely across individuals? Try these same searches using different databases. Is there more or less variability in the results?

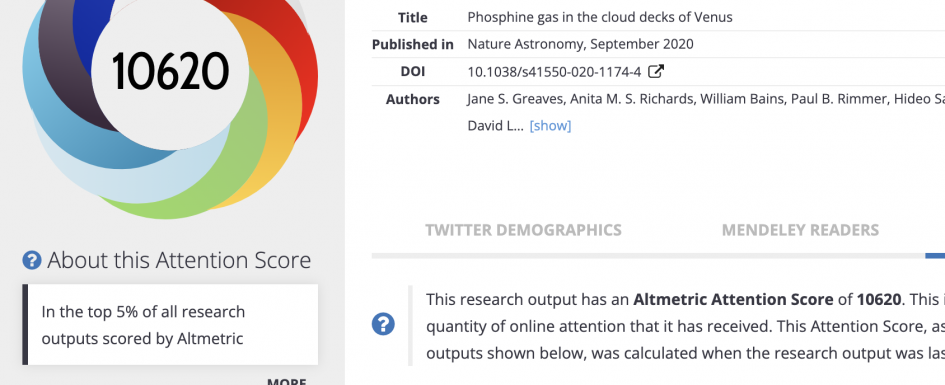

Examine Altmetrics

Beyond citation-based measures such as the h-index, several companies collect alternative metrics (altmetrics). These companies include Plum Analytics, Altmetric.com, and ImpactStory, among others. Explore what alternative metrics exist and consider what kind of impact they are trying to measure.

GUIDING REALIZATIONS

- Data Sources Matter. Some fields are better represented in certain databases. Databases that exclude books and journals from some fields will not provide accurate guides to either publication or citation in those fields. Databases that include only certain publication languages and regions will also be biased in their scholarly representation.

- Data Context Matters. Citing practices, numbers of publications, and the size of the scholarly community vary widely by field. (Not to mention that “scholarly fields” are not always well defined with sharp boundaries.) Citation metrics cannot be compared across fields without some normalization for the significant differences within and between fields.

- Data Requires Interpretation. Citation metrics do not necessarily reflect judgements of the intellectual quality of the work or author cited. Citations can be approving, disapproving, or neutral. You need to read the citing article to try to glean why an article is cited, and there can be many reasons for citation that have little to do with quality or even content.

- Your Data is Not Your Own. Major publication databases, such as Google Scholar and Web of Science, offer very limited opportunities for scholars to curate their publication record. Other publication resources allow more curation, but usually that curation is limited to publication metadata, not to how your publication has been cited by others.
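To make the point about normalization across fields concrete, here is a rough sketch of one very simple approach: dividing each paper's citation count by the average citation count of papers in the same field. This is an illustrative assumption, not a method prescribed by this module; established indicators typically also account for publication year and document type. The function name `field_normalized_scores`, the field labels, and the citation counts are all hypothetical.

```python
from statistics import mean


def field_normalized_scores(papers):
    """Divide each paper's citations by the mean citations of its field.

    `papers` is a list of (field, citations) pairs. A score above 1.0 means
    the paper is cited more than the average paper in its own field.
    """
    # Group citation counts by field.
    by_field = {}
    for field, cites in papers:
        by_field.setdefault(field, []).append(cites)

    # Average citations per field (the normalization baseline).
    field_means = {field: mean(counts) for field, counts in by_field.items()}

    scores = []
    for field, cites in papers:
        baseline = field_means[field]
        # Avoid dividing by zero when no paper in the field has been cited.
        scores.append(cites / baseline if baseline else 0.0)
    return scores


# Hypothetical data (not real): two fields with very different citing habits.
papers = [("history", 4), ("history", 6), ("biology", 40), ("biology", 60)]
print(field_normalized_scores(papers))
```

In this made-up example, the history paper with 6 citations and the biology paper with 60 citations receive the same normalized score, because each is cited 1.2 times as often as the average paper in its field. Comparing the raw counts alone would suggest a tenfold difference.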

Additional Resources

- MacRoberts, Michael H., and Barbara R. MacRoberts. “The Mismeasure of Science: Citation Analysis.” Journal of the Association for Information Science and Technology 69, no. 3 (2018): 474–482.

- Hicks, Diana, et al. “The Leiden Manifesto for Research Metrics.” Nature 520 (2015): 429–431.

- Van Eck, N.J., & Waltman, L. (2014). “Visualizing bibliometric networks.” In Y. Ding, R. Rousseau, & D. Wolfram (Eds.), Measuring scholarly impact: Methods and practice (pp. 285–320). Springer.